I am Yingjie Chen, an AI Researcher at Alibaba TongYi Lab. I received my Ph.D. degree from the School of Computer Science, Peking University, in 2024, supervised by Prof. Tao Wang and Prof. Yun Liang. Before that, I received my B.S. degree from Peking University in 2019. My research focuses on Computer Vision, Affective Computing, and AI-Generated Content. At Alibaba, I work on generative AI models, especially controllable video generation.

We are now recruiting summer interns, and Research Intern (RI) positions are open for applications year-round. Feel free to contact me with your CV and research statement!

🔥 News

- 2025.06: 🎉 Perception-as-Control has been accepted by ICCV 2025.

- 2023.12: 🎉 Trend-Aware-Supervision has been accepted by AAAI 2024.

- 2023.10: 🎉 EventFormer has been accepted by BMVC 2023.

- 2023.05: 🎉 One paper has been accepted by SMC 2023.

- 2022.07: 🎉 On-Mitigating-Hard-Clusters has been accepted by ECCV 2022.

- 2022.07: 🎉 SupHCL has been accepted by ACM MM 2022.

- 2022.04: 🎉 HUMAN has been accepted by IJCAI 2022.

- 2021.12: 🎉 CIS has been accepted by AAAI 2022.

- 2021.07: 🎉 CaFGraph has been accepted by ACM MM 2021.

- 2021.07: 🎉 FSNet has been accepted by RA-L 2021.

- 2021.07: 🎉 AUPro has been accepted by ICONIP 2021.

📝 Selected Publications

Perception-as-Control: Fine-grained Controllable Image Animation with 3D-aware Motion Representation

Yingjie Chen, Yifang Men, Yuan Yao, Miaomiao Cui, Liefeng Bo

- We introduce a 3D-aware motion representation and propose Perception-as-Control, an image animation framework that achieves fine-grained collaborative motion control. By constructing the 3D-aware motion representation from diverse user intentions and taking its perception results as motion control signals, Perception-as-Control can be applied to a variety of motion-related video synthesis tasks.

Yingjie Chen, Jiarui Zhang, Tao Wang, Yun Liang

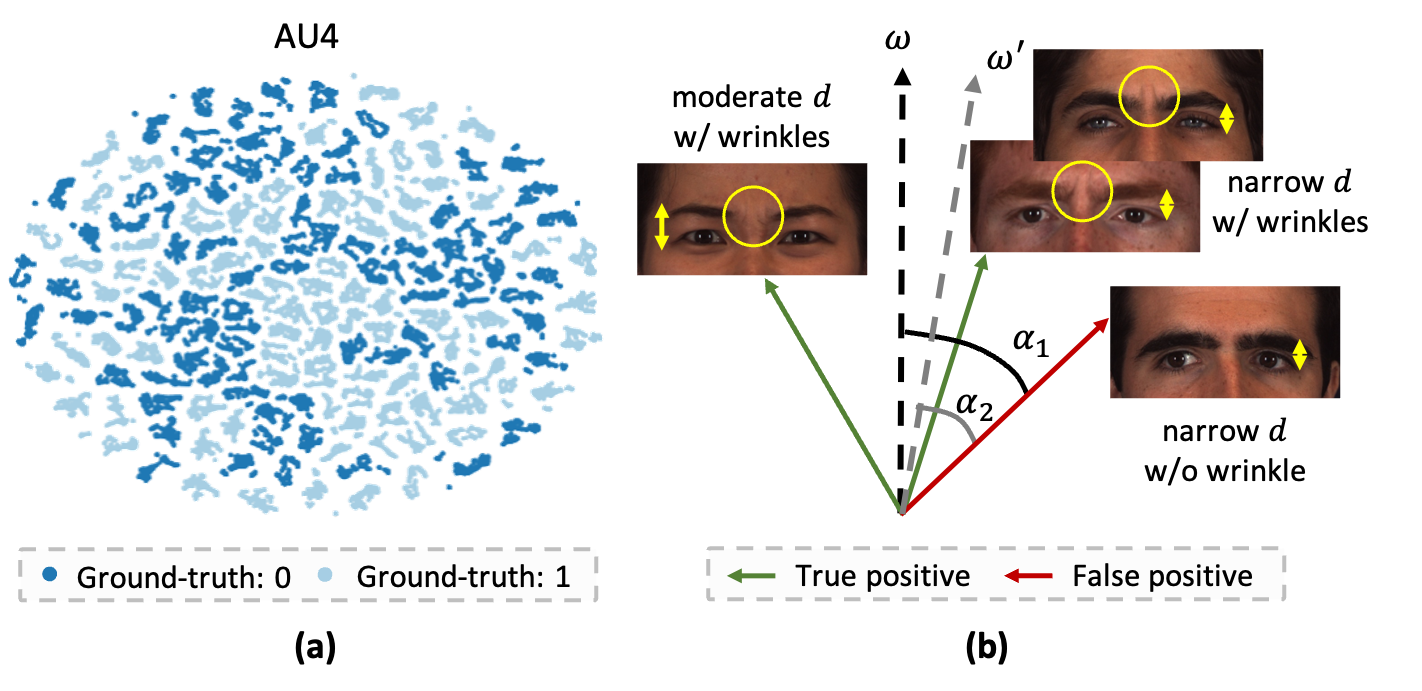

- We study the keyframe-based semi-supervised AU intensity estimation problem and identify spurious correlation as its main challenge. To address it, we propose Trend-Aware Supervision, which raises intra-trend and inter-trend awareness during training to learn invariant AU-specific features.

On Mitigating Hard Clusters for Face Clustering

Yingjie Chen, Huasong Zhong, Chong Chen, Chen Shen, Jianqiang Huang, Tao Wang, Yun Liang, Qianru Sun

- We study the face clustering problem and find that existing methods fail to identify hard clusters, yielding significantly lower recall for small or sparse clusters. To mitigate the issue of small clusters, we introduce NDDe, which is based on the diffusion of neighborhood densities.

Yingjie Chen, Diqi Chen, Tao Wang, Yizhou Wang, Yun Liang

- We observe three kinds of inherent relations among AUs that can be treated as strong prior knowledge, and we find that pursuing consistency with this knowledge is key to learning subject-consistent representations. To this end, we propose a supervised hierarchical contrastive learning method (SupHCL) for AU recognition that pursues knowledge consistency across different facial images and different AUs.

Causal Intervention for Subject-Deconfounded Facial Action Unit Recognition

Yingjie Chen, Huasong Zhong, Chong Chen, Chen Shen, Jianqiang Huang, Tao Wang, Yun Liang, Qianru Sun

- We formulate the subject-variant problem in AU recognition with an AU causal diagram that explains its causes. Based on this causal diagram, we propose CIS, a plug-in causal intervention module that can be inserted into advanced AU recognition models to remove the effect of the confounder Subject.

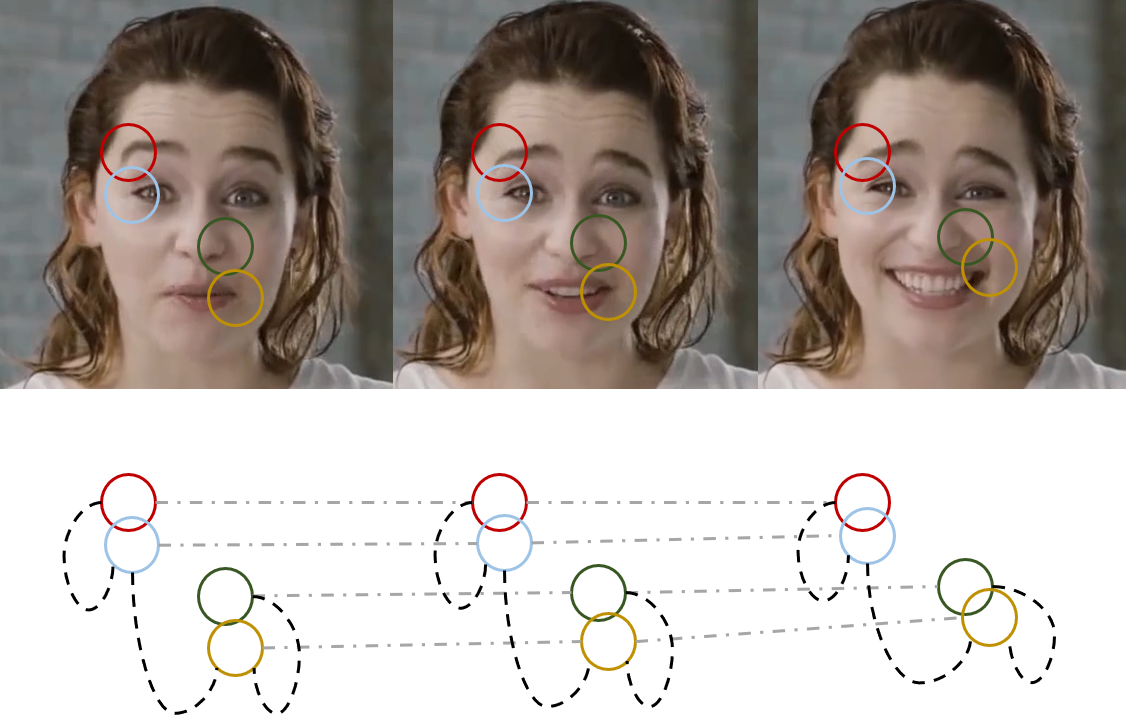

CaFGraph: Context-aware Facial Multi-graph Representation for Facial Action Unit Recognition

Yingjie Chen, Diqi Chen, Yizhou Wang, Tao Wang, Yun Liang

- Since context is essential for resolving ambiguity in the human visual system, modeling context within or across facial images is a promising approach for AU recognition. To this end, we propose CaFGraph, a novel context-aware facial multi-graph that models both morphology- and muscle-based region-level local context and region-level temporal context.

Cross-Modal Representation Learning for Lightweight and Accurate Facial Action Unit Detection

Yingjie Chen, Han Wu, Tao Wang, Yizhou Wang, Yun Liang

- The dynamic process of facial muscle movement, the core feature of an AU, has been largely ignored and rarely exploited by prior studies. Based on this observation, we propose the Flow Supervised Module (FSM), which explicitly captures dynamic facial movement in the form of flow and uses the learned flow to provide effective and efficient supervision signals to the detection model during training.

🎖 Honors and Awards

- UBIQuant Scholarship, PKU, 2023

- Peking University President Scholarship, 2022

- PKU Triple-A Student Pacesetter Award, 2021

- Outstanding Graduate, Beijing, 2019

- Outstanding Graduate, PKU, 2019

- Top 10 Excellent Graduation Thesis, 2019

- PKU Triple-A Student Award, 2018

- National Scholarship, 2018

- PKU Triple-A Student Award, 2017

- Scholarship of Kwang-Hua Education Foundation, 2017

- PKU Triple-A Student Award, 2016

- Scholarship of Tianchuang, 2016

💻 Work Experiences

- 2024.7 - Present, AI Researcher at Alibaba TongYi Lab.

- 2023.7 - 2023.10, Research Intern at Tencent.

- 2023.2 - 2023.6, Research Intern at Apple Inc.

- 2021.5 - 2022.7, Research Intern at Alibaba DAMO Academy.

- 2020.11 - 2021.2, Research Intern at SenseTime.

- 2018.8 - 2019.2, Research Intern at MSRA.